Building Web Cache Cluster using LVS

Introduction

Efficient web cache systems can greatly reduce network traffic, lower the response time of web services, and reduce the load on web servers. However, an overloaded cache server that cannot process requests in time will itself increase the response time of requests. Scalability is therefore essential for cache systems: as the load on the system increases, the whole system should be able to scale up to provide more caching capacity.

A single-server system usually does not scale well. For example, a web cache service that must handle several gigabits per second of traffic in an ISP or backbone network exceeds the throughput that even a Sun Enterprise 10000 server can deliver. Clusters of commodity PC servers are therefore an efficient way to build a scalable web service, and they also offer the highest performance/price ratio.

A web cache cluster can be deployed either near the clients, as a (transparent) proxy cache that speeds up web access, or near the servers, as a cache accelerator that takes load off the web servers.

Architecture

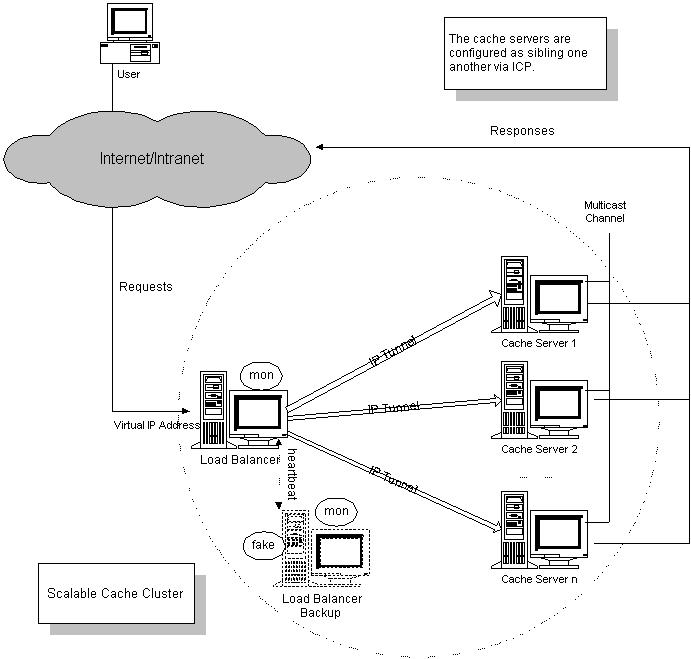

The system architecture of web cache cluster using LVS is illustrated in the following figure.

- Load Balancer: dispatches incoming requests to the cache servers, usually using LVS/TUN or LVS/DR for high scalability.

- Cache Server Array: performs the web object caching service.

The IPVS load balancer generally uses the LVS/TUN method to build a scalable cache cluster, because the cache servers may be deployed at different locations (for example, proxy cache servers may be installed near the Internet backbone), so the load balancer and the cache server array may not be on the same physical network. When the cache server array and the IPVS load balancer are on the same physical network, the LVS/DR method is recommended for higher scalability, since it avoids the IP-in-IP encapsulation overhead of tunneling.
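
The TUN-versus-DR choice can be sketched in Python as a small generator of `ipvsadm` commands. This is a minimal sketch under assumptions that are not from the original article: a cache server counts as "on the same physical network" when its address falls inside the load balancer's IP subnet, the virtual service listens on TCP port 3128 (Squid's default proxy port), the scheduler is `lblc` (the IPVS locality-based least-connection scheduler), and all addresses are placeholders from documentation ranges. `ipvsadm` takes the forwarding method per real server (`-g` for direct routing, `-i` for IP tunneling), so a mixed deployment is expressed server by server.

```python
from __future__ import annotations

import ipaddress


def forwarding_flag(real_ip: str, lb_subnet: str) -> str:
    """Pick the ipvsadm forwarding flag for one cache server.

    Assumption: membership in the load balancer's IP subnet is used as a
    stand-in for "on the same physical network".
    """
    if ipaddress.ip_address(real_ip) in ipaddress.ip_network(lb_subnet):
        return "-g"  # LVS/DR: direct routing (gatewaying)
    return "-i"      # LVS/TUN: IP-in-IP tunneling to a remote cache server


def ipvsadm_commands(vip: str, port: int, lb_subnet: str,
                     cache_servers: list[str],
                     scheduler: str = "lblc") -> list[str]:
    """Build the commands that define one virtual service and its real servers."""
    service = f"{vip}:{port}"
    # -A adds the virtual TCP service, -s selects the scheduler (assumed lblc).
    cmds = [f"ipvsadm -A -t {service} -s {scheduler}"]
    for rip in cache_servers:
        # -a adds a real server; no port is given, so the service port is used.
        cmds.append(f"ipvsadm -a -t {service} -r {rip} "
                    f"{forwarding_flag(rip, lb_subnet)}")
    return cmds


if __name__ == "__main__":
    # Hypothetical cluster: two local cache servers and one remote one.
    for cmd in ipvsadm_commands("192.0.2.100", 3128, "192.0.2.0/24",
                                ["192.0.2.11", "192.0.2.12", "198.51.100.20"]):
        print(cmd)
```

The script only prints commands; applying them requires root on the load balancer, and the real servers still need the usual LVS/DR or LVS/TUN preparation (the VIP configured on a non-ARPing interface or on the tunnel device), which this sketch does not cover.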

When LVS/TUN or LVS/DR is used, the load balancer only dispatches requests, and the cache servers return response data to clients directly. If a requested object is not in the local cache, the cache server fetches it from the origin server directly. In such a transaction, the load balancer dispatches only the incoming request; the cache server sends its own request to the origin server, receives the response directly, and then returns the data to the client directly. If the LVS/NAT method were used in a proxy cache cluster, all four of these flows (the client's request, the request to the origin server, the origin server's response, and the reply to the client) would have to pass through the load balancer, making it a bottleneck. Therefore, LVS/TUN and LVS/DR are very effective for building scalable cache clusters.
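
To make the traffic argument concrete, the following Python sketch counts how many bytes of a single cache-miss transaction cross the load balancer under each forwarding method. It is a simplified model rather than a measurement: the `Transaction` sizes in the example are made-up illustrative numbers, and packet headers and TCP acknowledgements are ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    """Sizes in bytes of the four flows of one cache-miss transaction."""
    client_request: int   # client -> cache server (via the load balancer)
    origin_request: int   # cache server -> origin server
    origin_response: int  # origin server -> cache server
    client_response: int  # cache server -> client


def bytes_through_balancer(tx: Transaction, method: str) -> int:
    """Bytes of one transaction that the load balancer has to forward."""
    if method in ("TUN", "DR"):
        # Only the client's request is dispatched by the load balancer;
        # the other three flows bypass it.
        return tx.client_request
    if method == "NAT":
        # The cache servers route through the load balancer, so all
        # four flows cross it.
        return (tx.client_request + tx.origin_request
                + tx.origin_response + tx.client_response)
    raise ValueError(f"unknown LVS method: {method}")


if __name__ == "__main__":
    # Illustrative sizes: small requests, 20 KB object.
    tx = Transaction(client_request=500, origin_request=500,
                     origin_response=20_000, client_response=20_000)
    print(bytes_through_balancer(tx, "DR"))   # 500
    print(bytes_through_balancer(tx, "NAT"))  # 41000
```

Because responses are usually much larger than requests, the load balancer's share under LVS/TUN or LVS/DR stays small even when the cache servers move a lot of data, which is the reason these methods scale better than LVS/NAT.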

The cache servers usually run Squid to cache web objects. Since caching objects requires write operations to storage, it is best to use local disks on the cache servers to improve I/O speed. The Squid servers in the array can use a multicast channel and ICP (Internet Cache Protocol) to exchange information. When an object is not found on its local disk, a cache server can use ICP to ask whether any of the other cache servers has the requested object. On a hit, the cache server fetches a copy of the object directly from that nearby cache server, which improves response time and saves network traffic.
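
The lookup order described above (local disk, then sibling caches via ICP, then the origin server) can be modeled with the in-memory Python sketch below. It is a hypothetical simulation, not Squid's implementation and not the ICP wire protocol: the `CacheServer` class, its `icp_query` method, and the dictionary standing in for the local disk are all illustrative, and the sibling query is a plain loop rather than a multicast UDP datagram. The sketch keeps a local copy of every object it fetches, so a later request for the same URL is a local hit.

```python
from __future__ import annotations

from typing import Callable


class CacheServer:
    """Hypothetical in-memory model of one cache server in the array."""

    def __init__(self, name: str, origin_fetch: Callable[[str], bytes]):
        self.name = name
        self.store: dict[str, bytes] = {}      # stands in for the local disk cache
        self.siblings: list[CacheServer] = []  # other servers in the array
        self.origin_fetch = origin_fetch       # fetches from the origin server

    def icp_query(self, url: str) -> bool:
        """Simulated ICP query: answer HIT if the object is cached here."""
        return url in self.store

    def get(self, url: str) -> tuple[bytes, str]:
        """Return the object and where it came from."""
        if url in self.store:
            return self.store[url], "LOCAL_HIT"
        # Local miss: ask the siblings. Real Squid would send ICP queries
        # (possibly one multicast datagram); here we simply loop.
        for peer in self.siblings:
            if peer.icp_query(url):
                obj = peer.store[url]  # stands in for fetching from the sibling
                self.store[url] = obj
                return obj, f"SIBLING_HIT:{peer.name}"
        # No sibling has it: go to the origin server.
        obj = self.origin_fetch(url)
        self.store[url] = obj
        return obj, "ORIGIN"


if __name__ == "__main__":
    def origin(url: str) -> bytes:
        return b"body:" + url.encode()

    a = CacheServer("cache-a", origin)
    b = CacheServer("cache-b", origin)
    a.siblings, b.siblings = [b], [a]
    url = "http://example.com/x"
    print(a.get(url)[1])  # ORIGIN
    print(b.get(url)[1])  # SIBLING_HIT:cache-a
    print(b.get(url)[1])  # LOCAL_HIT
```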

Configuration Example

Conclusion

"Building Web Cache Cluster using LVS" is an LVS Example related stub. You can help LVSKB by expanding it.